Logo A/B Testing: Simple Frameworks That Actually Work

Effective branding is the cornerstone of a successful business, and a company’s logo plays an outsized role in shaping first impressions. Whether you’re launching a startup or rebranding an established business, the logo must resonate with your target audience. But how do you know which logo design will make the strongest impact? The answer lies in logo A/B testing — a data-driven way to determine the best logo by testing different versions with real users.

TL;DR

Logo A/B testing allows businesses to compare multiple logo designs in real-world conditions to determine which is more effective. It involves testing different variants with specific user segments and analyzing metrics like engagement, recall, and preference. Simple A/B testing frameworks can eliminate guesswork and lead to branding decisions grounded in actual user behavior. This article walks through the methods and tools involved in running successful logo A/B tests.

Why Logo A/B Testing Matters

Choosing a logo is a weighty decision. It affects how people perceive your business, how they remember it, and how likely they are to engage with it. Graphic designers and brand experts can offer insights, but their judgment is still opinion until it is checked against how real users respond. A data-backed selection process makes it more likely that the final design resonates with its intended audience.

Some key objectives of logo A/B testing include:

- Improving brand recognition

- Increasing trust and credibility

- Enhancing customer engagement

- Validating creative decisions with tangible results

Instead of relying on internal bias or assumptions, companies can run a structured A/B test to get actionable feedback on their logo choices.

Basic Frameworks for Logo A/B Testing

There are several testing models you can use, depending on your resources, target audience, and goals. Below are a few simple but effective frameworks that actually work:

1. Direct Preference Testing

This is the most straightforward method. Present two or more logo variations to users and ask them which one they prefer. This can be done through online surveys, focus groups, or even social media polls.

Steps include:

- Create 2-3 logo variants with clear stylistic differences.

- Use tools like SurveyMonkey, Google Forms, or Lyssna (formerly UsabilityHub) to gather responses.

- Ask simple questions: “Which logo do you prefer and why?”

- Analyze open-ended feedback to understand design themes that resonate.

Pros: Fast, low-cost, and easy to implement.

Cons: Responses may be subjective and influenced by users’ personal taste.
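One way to check whether a preference split is more than noise is an exact binomial test against a 50/50 split. The sketch below is illustrative only: the function name `preference_p_value` and the vote counts are assumptions, and it covers the two-logo case.

```python
from math import comb


def preference_p_value(votes_a: int, votes_b: int) -> float:
    """Two-sided exact binomial test: could this split come from a coin flip?"""
    n = votes_a + votes_b
    if n == 0:
        raise ValueError("need at least one vote")
    k = max(votes_a, votes_b)
    # Probability of a split at least this lopsided in one direction.
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # Double it for the two-sided test; cap at 1 because the tails overlap on a tie.
    return min(1.0, 2 * tail)


# Hypothetical survey: 60 respondents chose logo A, 40 chose logo B.
p = preference_p_value(60, 40)
print(f"p-value: {p:.3f}")
```

With these made-up numbers the p-value lands slightly above 0.05, so even a 60/40 split among 100 respondents is not conclusive at the usual threshold. That is a useful reminder to collect more responses before declaring a winner.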

2. Split URL Testing

If you’re redesigning your brand, consider using two separate landing pages, each featuring a different logo. Split traffic randomly and evenly between them, then compare engagement metrics such as bounce rate, time on page, and conversion rate.

Steps include:

- Design two identical landing pages, with logos being the only difference.

- Use A/B testing tools like Unbounce, VWO, or Optimizely.

- Monitor key metrics to determine which version performs better.

Pros: Measures actual behavior instead of opinions.

Cons: Requires more setup and analytics expertise.
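To decide whether one landing page really "performs better" on conversion rate, a common choice is a two-proportion z-test. The following is a minimal sketch using only the Python standard library. The function name and the visitor counts are hypothetical, and most testing tools run an equivalent calculation for you.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both logos convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical results: 1,000 visitors per page, 100 vs. 150 conversions.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be chance. The normal approximation assumes reasonably large samples, so treat results from a few dozen visitors with caution.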

3. Ad-Based Testing

Run online ads (Google Ads, Facebook Ads) with otherwise identical creatives but different logos. Use CTR (click-through rate) as the main performance metric.

Steps include:

- Design a simple ad campaign with two creative variants.

- Keep all elements the same except for the logo.

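- Analyze CTR and engagement once each variant has enough impressions for a reliable comparison. With CTRs of a few percent, that usually means thousands of impressions per variant, not a few hundred.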

Pros: Fast feedback from real users.

Cons: Requires an ad budget and doesn’t reveal why users prefer one logo over the other.
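How many impressions are enough depends on your baseline CTR and the smallest lift you want to detect. As a rough sketch, the standard sample-size formula for comparing two proportions can be computed as below. The function name, the 2% baseline, and the 2.5% target are assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def impressions_per_variant(baseline_ctr: float, expected_ctr: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate impressions needed per ad variant to detect the CTR difference."""
    if not (0 < baseline_ctr < 1 and 0 < expected_ctr < 1):
        raise ValueError("CTRs must be between 0 and 1")
    if baseline_ctr == expected_ctr:
        raise ValueError("CTRs must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (baseline_ctr + expected_ctr) / 2
    spread_null = sqrt(2 * p_bar * (1 - p_bar))
    spread_alt = sqrt(baseline_ctr * (1 - baseline_ctr)
                      + expected_ctr * (1 - expected_ctr))
    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return ceil(numerator / (baseline_ctr - expected_ctr) ** 2)


# Hypothetical campaign: 2.0% baseline CTR, hoping to detect a lift to 2.5%.
print(impressions_per_variant(0.02, 0.025))
```

For these illustrative inputs the answer is on the order of fourteen thousand impressions per variant. Smaller expected lifts push the requirement up quickly, which is worth knowing before you set the budget.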

Audience Segmentation for Better Insights

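Not all feedback is created equal. Pooling every respondent into one result can hide the fact that different groups react differently, so the overall winner may not be the best choice for the audience you care about most. Ideally, segment your audience to test logos among specific user types, such as: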

- New customers vs. returning customers

- Demographics like age or region

- Device users: mobile vs. desktop

This will help you understand whether a logo resonates differently among market segments, which can guide branding strategies such as regional adaptations or campaigns targeting niche demographics.
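A segment breakdown can be as simple as grouping results by segment and variant before computing rates. The sketch below assumes each visit is recorded as a `(segment, variant, converted)` tuple. That record shape, the function name, and the sample data are all hypothetical.

```python
from collections import defaultdict


def rates_by_segment(events):
    """Map (segment, variant) to its conversion rate."""
    # (segment, variant) -> [conversions, visitors]
    totals = defaultdict(lambda: [0, 0])
    for segment, variant, converted in events:
        cell = totals[(segment, variant)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


# Made-up visits for illustration only.
events = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", True), ("desktop", "B", False),
]
for (segment, variant), rate in sorted(rates_by_segment(events).items()):
    print(f"{segment:8s} logo {variant}: {rate:.0%}")
```

Each segment contains fewer users than the full test, so differences within a segment need the same significance check as the overall result, and often a longer run.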

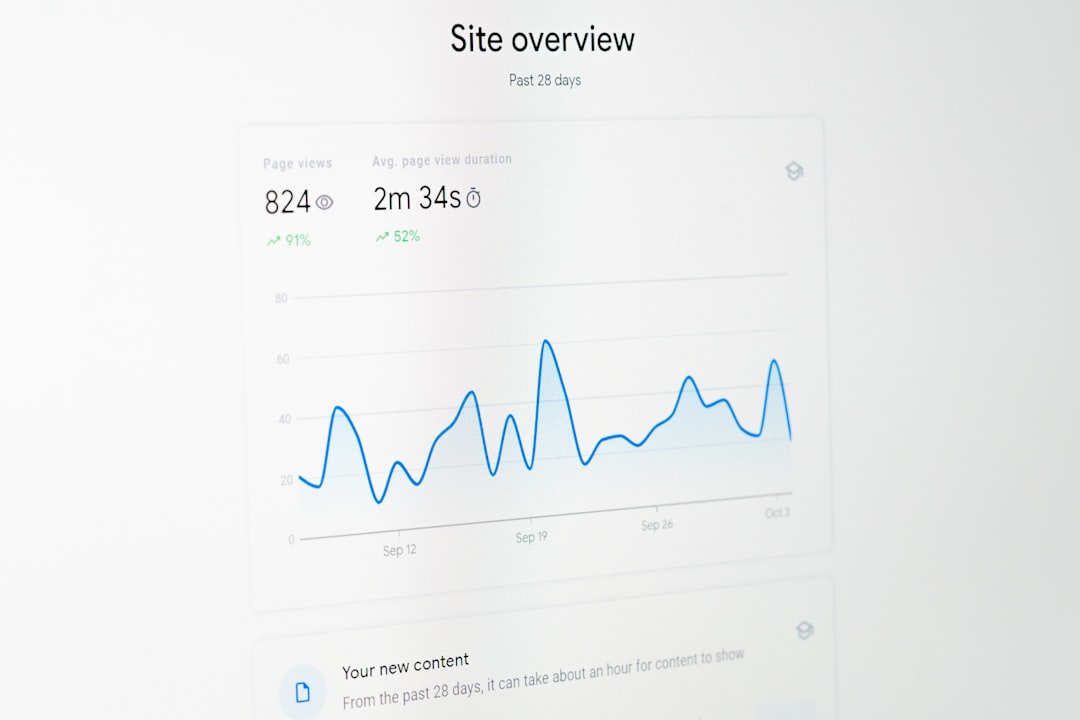

Using Analytics and UX Tools

Effective logo A/B testing relies on proper tracking and data interpretation. Beyond surveys and engagement metrics, integrate these tools for deeper understanding:

- Heatmaps: Use Hotjar or Crazy Egg to see if logo placement affects user interaction.

- Eye tracking: Tools like Attention Insight predict where viewers are likely to look, approximating eye tracking to show which logo attracts more attention.

- Session recordings: Observe how users interact with pages using different logos.

- Data analysis platforms: Leverage tools like Analytica to process complex datasets, uncover trends, and gain actionable insights for your branding decisions.

Common Pitfalls to Avoid

Testing logos may sound simple, but pitfalls can skew results. Here are common mistakes and how to avoid them:

- Testing too many variants at once: Simplify to 2-3 options to isolate variables.

- Relying on vanity metrics: Likes and shares don’t always translate to engagement or trust.

- Running tests too briefly: Set a target sample size up front and make sure the result is statistically significant before drawing conclusions.

- Ignoring qualitative feedback: Combine metrics with user comments for better insights.
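One reason extra variants complicate a test is that every additional pairwise comparison is another chance of a false positive. A simple, conservative safeguard is the Bonferroni correction, which divides the significance threshold by the number of comparisons. The sketch below uses hypothetical p-values and a hypothetical function name.

```python
def bonferroni_significant(p_values: dict, alpha: float = 0.05) -> dict:
    """Flag which comparisons remain significant after Bonferroni correction."""
    if not p_values:
        raise ValueError("need at least one comparison")
    # Each comparison must clear a stricter threshold: alpha / number of tests.
    threshold = alpha / len(p_values)
    return {name: p < threshold for name, p in p_values.items()}


# Made-up p-values from comparing three logo variants pairwise.
results = bonferroni_significant({"A vs B": 0.03, "A vs C": 0.01, "B vs C": 0.20})
for name, significant in results.items():
    print(name, "significant" if significant else "not significant")
```

Here "A vs B" would pass an uncorrected 0.05 threshold but fails the corrected one, which illustrates why two or three variants are easier to interpret than five or six.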

When to Run Logo A/B Tests

Here are key stages when logo A/B testing can bring the most value:

- Startup launch: Choose the logo that makes the strongest first impression.

- Rebranding: Ensure current users connect with the new identity.

- Entering new markets: Test for cultural or regional preferences.

Being strategic about the timing of your tests allows you to gather insights when they’ll have the most impact.

Conclusion

In an era where branding plays a pivotal role in customer decisions, logo A/B testing is less a luxury than a practical necessity. By taking a data-driven approach, companies can replace subjective guesswork with informed branding choices. With simple frameworks like preference testing, split-URL testing, and ad-based testing, businesses of all sizes can refine their visual identity. The best logo isn’t always the one that looks the sleekest to your design team; it’s the one your users respond to the most.

FAQs

- Q: How long should a logo A/B test run?

A: Ideally, run the test until you’ve reached statistical significance, which may range from several days to a few weeks depending on traffic.

- Q: Is it okay to test more than two logos?

A: Yes, but it’s best to limit to two or three variants to maintain test clarity and minimize complexity in data analysis.

- Q: What metrics are most important for logo testing?

A: Focus on a mix of quantitative metrics (CTR, bounce rate) and qualitative insights (user preference, feedback).

- Q: Should I test the logo in isolation or in-context?

A: Always aim for in-context testing: users must see the logo within a real or realistic environment to provide valid results.

- Q: Can small businesses afford to run logo A/B tests?

A: Absolutely. Many tools like Google Forms, Typeform, and Facebook Ads make it both accessible and affordable.